Start Training YOLO with Our Own Data

Related Articles:

- YOLO CPU Running Time Reduction: Basic Knowledge and Strategies

- Build Personal Deep Learning Rig: GTX 1080 + Ubuntu 16.04 + CUDA 8.0RC + CuDnn 7 + Tensorflow/Mxnet/Caffe/Darknet

- Recurrent YOLO for Object Tracking [Project Page][Arxiv][Github]

- SSD in MxNet with C++ test modules [Github], by my roomie [Zhi Zhang]

- LightTrack: Online Human Pose Tracking [Project Page][Arxiv][Github]

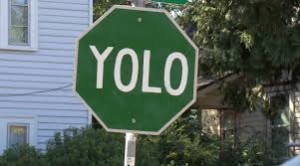

YOLO, short for You Only Look Once, is a real-time object recognition algorithm proposed in the paper You Only Look Once: Unified, Real-Time Object Detection, by Joseph Redmon, Santosh Divvala, Ross Girshick, and Ali Farhadi.

The open-source code, called darknet, is a neural network framework written in C and CUDA. The original GitHub repository is here.

As discussed in my previous post (in Chinese), the Jetson TX1 from NVIDIA is a boost to the application of deep learning on mobile devices and embedded systems. Many potentially inspiring products are on the way, one of which, to name just one, is the real-time realization of computer vision tasks on mobile devices. Imagine real-time abnormal action recognition under surveillance cameras, real-time scene text recognition by smart glasses, or real-time object recognition by smart vehicles or robots. Not excited? How about this: real-time computer vision tasks on egocentric videos, or on your AR and even VR devices. Imagine you watch a video clip shot by Kespry (What is this?) and experience how Messi beat fewer than a dozen players and scored a goal. This could be used for educational purposes, where you stand in a player’s shoes and study how he or she observes the real-time circumstances and handles the ball. (If you are considering a patent, please put my name at the end of the inventors list.)

That being said, I assume you have at least some interest in this post. The author of YOLO has already illustrated how to quickly run the code, so this article is about how to immediately start training YOLO with our own data and object classes, in order to apply object recognition to specific real-world problems.

Here are two DEMOS of YOLO trained with customized classes:

Yield Sign:

Stop Sign:

The cfg that I used is here: darknet/cfg/yolo_2class_box11.cfg

The weights that I trained can be downloaded here: yolo_2class_box11_3000.weights

The pre-compiled software with source code package for the demo: darknet-video-2class.zip

You can use this as an example. The code above is ready to run the demo.

In order to run the demo on a video file, just type:

./darknet yolo demo_vid cfg/yolo_2class_box11.cfg model/yolo_2class_box11_3000.weights /video/test.mp4

If you would like to repeat the training process or get a feel for YOLO, you can download the data I collected and the annotations I labeled.

images: images.tar.gz

labels: labels.tar.gz

I have forked the original GitHub repository and modified the code so that it is easier to start with. Well, it was already easy to start with, but I have so far added a few niche features that might be helpful, so that you do not have to do the same thing again (unless you want to do it better):

(1). Read a video file, process it, and output a video with bounding boxes.

(2). Some utility functions like image_to_Ipl, which converts an image from darknet back to the IplImage format of OpenCV (C).

(3). Some Python scripts to label our own data and preprocess annotations into the format required by darknet.

(…More may be added)

This fork repository also illustrates how to train a customized neural network with our own data, with our own classes.

1. Collect Data and Annotation

(1). For Videos, we can use video summary, shot boundary detection or camera take detection, to create static images.

(2). For Images, we can use BBox-Label-Tool to label objects.

2. Create Annotation in Darknet Format

(1). If we choose to use VOC data to train, use scripts/voc_label.py to convert existing VOC annotations to darknet format.

(2). If we choose to use our own collected data, use scripts/convert.py to convert the annotations.

At this step, we should have darknet annotations (.txt) and a training list (.txt).

Upon labeling, the format of annotations generated by BBox-Label-Tool is:

class_number

box1_x1 box1_y1 box1_width box1_height

box2_x1 box2_y1 box2_width box2_height

….

After conversion, the format of annotations converted by scripts/convert.py is:

class_number box1_x1_ratio box1_y1_ratio box1_width_ratio box1_height_ratio

class_number box2_x1_ratio box2_y1_ratio box2_width_ratio box2_height_ratio

….

Note that each image corresponds to one annotation file, but we only need a single training list of images. Remember to put the “images” folder and the “annotations” folder in the same parent directory, as the darknet code looks for annotation files this way (by default).
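As a rough sketch of the conversion described above, the snippet below turns one box into a line of ratios. It assumes the box is given as top-left x, top-left y, width, and height in pixels, exactly as laid out above, and that each ratio is obtained by dividing by the image width or height. The function name to_ratio_line is made up for illustration and is not taken from scripts/convert.py. As far as I know, darknet label files usually store the box center rather than the corner, so please check the script itself for the exact convention before relying on this.

```python
def to_ratio_line(class_number, x1, y1, box_w, box_h, img_w, img_h):
    """Format one box as 'class x_ratio y_ratio w_ratio h_ratio'.

    Assumption: ratios are pixel values divided by the image size.
    """
    if img_w <= 0 or img_h <= 0:
        raise ValueError("image size must be positive")
    ratios = (
        x1 / float(img_w),     # horizontal position relative to image width
        y1 / float(img_h),     # vertical position relative to image height
        box_w / float(img_w),  # box width relative to image width
        box_h / float(img_h),  # box height relative to image height
    )
    return "%d %s" % (class_number, " ".join("%.6f" % r for r in ratios))


# Hypothetical box on an 800x600 image.
print(to_ratio_line(0, 80, 60, 160, 120, 800, 600))
```

One line like this is written per box, so an image with three labeled signs gets a three-line annotation file.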

You can download some examples to understand the format:

3. Modify Some Code

(1) In src/yolo.c, change the number of classes and the class names. (Also change the paths to the training data and the annotations, i.e., the list we obtained in step 2.)

If we want to train new classes, in order to display the correct png label files, we also need to modify and run data/labels/make_labels.

(2) In src/yolo_kernels.cu, change class numbers.

(3) Now we are able to train with new classes, but there is one more thing to deal with. In YOLO, the number of outputs (neurons) of the second-to-last layer is not arbitrary. It is determined by other parameters, including the number of classes and the side (the number of splits along each dimension of the image). Please read the paper for details.

For example, assuming no other parameters are modified, the size is (5 x 2 + number_of_classes) x 7 x 7.

Therefore, in cfg/yolo.cfg, change “output” in line 218 and “classes” in line 222.
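To double-check the number before editing the cfg file, the formula above can be written as a tiny helper. This is only a sketch: the function name and its parameter names are mine, and the factor 5 assumes each predicted box carries x, y, width, height, and a confidence score, as in the paper.

```python
def yolo_output_size(num_classes, side=7, boxes_per_cell=2):
    """Size of the layer feeding the detection layer, per the formula above."""
    # Each box contributes 5 numbers: x, y, w, h and a confidence score.
    return (5 * boxes_per_cell + num_classes) * side * side


for classes, side in [(20, 7), (2, 7), (2, 11)]:
    print("classes=%d side=%d output=%d" % (classes, side, yolo_output_size(classes, side)))
```

With 2 classes this gives 588 for the default 7 x 7 split and 1452 for an 11 x 11 split, which are the two values that come up in the FAQ below.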

(4) Now we are good to go. If we need to change the number of layers and experiment with various parameters, just edit the cfg file. For the original yolo configuration, we have the pre-trained weights to start from. For an arbitrary configuration, I’m afraid we have to generate the pre-trained model ourselves.

4. Start Training

Try something like:

./darknet yolo train cfg/yolo.cfg extraction.conv.weights

If you find any problems regarding the procedure, contact me at gnxr9@mail.missouri.edu.

Or you can join the darknet Google Group; there are many brilliant people answering questions there.

PS:

I also have a windows version for darknet available:

http://guanghan.info/projects/YOLO/darknet-windows.zip

But you need to use Visual Studio 2015 to open the project. Also note that this Windows version is only ready for testing. The purpose of this version is fast testing of cpuNet.

Here is a quick hands-on guide:

1. Open VS2015. If you don't have it, you can install it for free from the official Microsoft website.

2. Open: darknet-windows\darknet\darknet.sln

3. Compile.

4. Copy the exe file from darknet-windows\darknet\x64\Debug\darknet.exe to the root folder: darknet-windows\darknet.exe

5. Open cmd and run: darknet yolo test [cfg_file] [weight_file] [img_name]

6. The images will be output to: darknet-windows\prediction.png and darknet-windows\resized.png

Recently I have received a lot of e-mails asking about YOLO training and testing. Some of the questions concern the same issue, so I picked some representative questions for this FAQ section. If you find a similar question here, you may have your answer right away. Since I am also a student of darknet, if you find any of my answers erroneous, please comment below. Thanks!

FAQ:

Q:

Sorry about last email, I just re-read your Github and found you used (5 x 2 + 2) x 11 x 11 = 1452.

I have another confusion about the subdivisions: the author set subdivisions = 64. Can you give me some clues about what this variable means?

A:

In my understanding, if you have 128 images as a batch, for example, you batch update the weights upon processing 128 images.

And if you have subdivision to be set to 64, you have 2 images for each subdivision. For each division, you concatenate the ground truth image feature vectors into one and process it as a whole.

If you set subdivision to 2, the training is the fastest, but you see fewer results printed out.
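The arithmetic in this answer can be sketched as follows. It simply follows my understanding above (weights are updated once per batch, and each subdivision holds batch / subdivisions images); the function name is made up, and rejecting a batch that does not divide evenly is my own choice rather than darknet behavior.

```python
def images_per_subdivision(batch, subdivisions):
    """How many images are processed together in one subdivision."""
    if subdivisions <= 0 or batch % subdivisions != 0:
        raise ValueError("batch should be a positive multiple of subdivisions")
    return batch // subdivisions


for batch, subdivisions in [(128, 64), (128, 2), (64, 8)]:
    print("batch=%d subdivisions=%d -> %d images at a time"
          % (batch, subdivisions, images_per_subdivision(batch, subdivisions)))
```

More images per subdivision need more GPU memory at once, which is why increasing subdivisions is a common reaction to out-of-memory errors.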

Q:

– how to get the predicted bounding boxes coordinates, I am planning to write the complete results, detection class labels and the associated predicted bounding boxes coordinates in a flat text file. Which source file I should look into or modify to do this?

– when to stop training? – when I am training yolo it is not showing any information about the accuracy of the network?

– I am wondering how to test it on videos, because the test command takes an image at a time, so how to pass multiple images to yolo for testing?

A:

As for the bounding boxes, you can find them in [yolo_kernels.cu], in the function “void *detect_in_thread(void *ptr)”.

If you want to control the training, just modify the configuration file. For example, in [yolo_2class_box11.cfg], change the max_batches and steps in line 14 and 12, respectively.

In order to test a video file, just type this in the terminal: ./darknet yolo vid_demo yolo_2class_box11.cfg yolo_2class_box11_3000.weights /video/test.mp4

Q:

I downloaded all your images and lables, modified my training_list.txt and start to train, but always ends with a ‘Couldn’t open file: /root/cnn/guanghan/darknet/scripts/labels/stopsign/rural_027.txt’ problem.

Each time says the different file that can not open.

A:

Please check if the folder [labels] and the folder [images] are in the same directory. I think this is the problem.

Q:

Yes, they are all in the darknet/scripts directory:

A:

Oh, you made a typo… It is “labels”, not “lables”. That’s the issue. >_<!
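A mistake like this is easy to catch before training with a small script. The sketch below assumes the convention described in this post: the annotation for an image lives in a sibling “labels” folder, with the same file name and a .txt extension. The helper names are hypothetical, and darknet's own path substitution may differ in details, so treat this as a sanity check only.

```python
import os


def label_path_for(image_path):
    """Guess the annotation path by swapping the 'images' folder for 'labels'."""
    parts = image_path.split("/")
    if "images" not in parts:
        raise ValueError("no 'images' folder in path: %s" % image_path)
    # Replace the last 'images' component, closest to the file itself.
    idx = len(parts) - 1 - parts[::-1].index("images")
    parts[idx] = "labels"
    root, _ext = os.path.splitext("/".join(parts))
    return root + ".txt"


def missing_labels(training_list_path):
    """Return expected label paths that do not exist on disk."""
    missing = []
    with open(training_list_path) as handle:
        for line in handle:
            image_path = line.strip()
            if image_path:
                label = label_path_for(image_path)
                if not os.path.isfile(label):
                    missing.append(label)
    return missing


print(label_path_for("/root/darknet/scripts/images/stopsign/rural_027.JPEG"))
```

Running missing_labels on the training list and getting a long list back usually points to a misnamed or misplaced folder rather than to individual missing files.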

Q:

Thanks for your reply, Ning. I tried to repeat the training results of stopsign and yieldsign but looks like I am not able to get the right results.

- After downloading your images and annotations,

- I changed the line 218 output=588 and classes=2 in cfg/yolo-tiny.cfg. In your github website, yolo_2class_box11.cfg line 218 is output=1452. This is not correct for 2 classes, right?

- After execute the training, for several tries, the AOU=nan….

- Anyway, I waited for a couple of hours and get a 2000 iteration weight results.

- By testing on this video https://www.youtube.com/watch?v=OCaRH_C_USg with setting threshold=0, no bounding box show up.

Any comments? I am afraid something wrong at step 3 because everything is nan….

A:

- The second last layer is correct. For 11 by 11 splits of image (in order to be more accurate in small object recognition), the number of neurons at this layer should be: (5*2 + 2)* 11*11= 1452.

- During training, “AOU= nan” sometimes occurs. This is a little complicated to explain, but I will do my best to simplify the answer:

- First, try to reduce the learning rate to a smaller value, this usually helps.

- When the annotation data is not correct, by which I mean there exists a training image whose annotation is empty.

- If there is no object labeled, the code will try to update the weights at some point, with no actual data fed. This batch update will be nonsense, and ruin the current weights.

- But since you downloaded my data, this should not be the case.

- When the annotation data is correct, this sometimes happens because there is a hidden bug in darknet code.

- During training, the code tries to “randomly” sample image patches from the training image, as a way of data augmentation. If the bounding box is near the edge of the image, sometimes the sampled patch will cross the border. This invalid data will ruin the update of weights as well.

- One way to avoid this is to batch update the weights with many images instead of a single image, which alleviates the effect.

- This is why in the cfg file I set subdivisions = 2 instead of default 64.

- If you use the tiny model, you should pre-train the model yourself or use darknet.conv.weights, rather than fine-tuning with the provided pre-trained model extraction.conv.weights. It is because the pre-trained model extraction.conv.weights had more layers than the tiny model.
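Since empty or invalid annotations are one of the causes listed above, it may help to scan the annotation files before training. The following is a minimal sketch, assuming each non-empty line has five fields (a class number followed by four ratios between 0 and 1), as in the converted format shown earlier. The function name and the wording of the messages are my own.

```python
def check_annotation_text(text):
    """Return a list of problems found in the text of one annotation file."""
    problems = []
    rows = [line.split() for line in text.splitlines() if line.strip()]
    if not rows:
        problems.append("empty annotation")
    for number, row in enumerate(rows, start=1):
        if len(row) != 5:
            problems.append("line %d: expected 5 fields" % number)
            continue
        try:
            values = [float(v) for v in row[1:]]
        except ValueError:
            problems.append("line %d: non-numeric field" % number)
            continue
        # Ratios outside [0, 1] mean the box leaves the image.
        if any(v < 0.0 or v > 1.0 for v in values):
            problems.append("line %d: ratio outside [0, 1]" % number)
    return problems


print(check_annotation_text(""))
print(check_annotation_text("1 0.9 0.5 1.3 0.2"))
```

To use it on real data, read each .txt file and pass its contents to the function; any file that returns a non-empty list deserves a look.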

Q:

I tried to add a 3rd class in the training, but somehow the test always gives me the first two. The 3rd class never showed up if I set the threshold to 0 or even -1.

Does your training code only work for 2 classes?

I changed the number of classes to 3 both in yolo.c and cfg.

A:

Have you checked out yolo_kernels.cu? You should set CLS_NUM to be 3 in there as well.

Q:

I see that the bounding boxes for the yieldsign and stopsign are not tight. Also, the top left corner seems to be where it should be. Is there any specific reason for the bounding box to be bigger than it should be, while the top left corner is located exactly?

A:

By default, YOLO splits the image into 7*7 partitions. In order to predict more precisely, you could modify the second-to-last layer to use 11*11 partitions, for example. This only alleviates the problem and does not completely solve it, because the splits are still not arbitrary.

Fast R-CNN can generate more precise detections because the bounding box can occur at an arbitrary position, and that is why it is much slower than YOLO.

One thing we can do is add a post-processing step to align the bounding box.

Q:

I wanted to ask what data you used for this..is this any public data you used, or did you annotate your own data..

How many frames did you have to train on for each of these classes to get a decent performance?

A:

The data I used was downloaded from [Google Images], and hand-labeled by my intern employees. Just kidding, I had to label it myself. It is covered in the README how to label data.

Since I am training with only two classes, and the signs have less distortion and variance (compared to a person or a car, for example), I only trained on around 300 images for each class to get a decent performance. But if you are training with more classes or harder classes, I suggest you have at least 1000 images for each class.

Q:

Is it possible to share the data and the annotations you collected for me to get a feel of YOLO?. I am limited by resources and hence not able to test it on large datasets as of now. Also, it will be easy for me to comprehend your instructions with this data.

A:

I have uploaded the data that I trained on for the demo, with the corresponding annotations. If you download them, you should be able to repeat the training process easily.

Here are the links: images.tar.gz , labels.tar.gz

Q:

I have successfully run your example, but when switched to running my data with 10 class (1000 images) I got an error “cannot load image”

I have checked the image file and the link to the image, and it opens normally. Do you have any suggestions?

A:

I have encountered similar problems before. In my case, the solution was to check the txt file. It seems that there is a difference between Python 2 and Python 3 in treating spaces in text files. You cannot see the difference in the text file, though (unless you look at the individual characters). I used Windows (Python 3) to generate the text file and Ubuntu (Python 2) to load the images, and that’s where I met this problem.

In short, the program failed to interpret and find the correct path.

Maybe you are having the same issue? Please check it out.

233 Comments on "Start Training YOLO with Our Own Data"

During training, the “AOU= nan” sometimes occur and ruin the training process. When bounding box is near the edge of the image, how can i avoid the sampled patch will cross the border and remove the invalid data?

Maybe rewriting the code and adding some assertions will work. But in most cases, when “AOU= nan” occurs, it is not because the ground truth ROI is too close to the edge; it is instead the result of an inappropriate learning rate that leads to “broken” weights.

Hello Guanghan,

I would like to know how to save the video you mentioned using yolov2. I can run the video, but I want to save it as well.

How did you use the BBox-Label-Tool? I tried here and the images simply won’t load in the program. I don’t know what is the problem.

Have you read their script yet? Maybe you should try naming the image folder “1”, because that’s the default loading folder.

Hey, thank you. I just read the code and the problem was the size of the images. I add some lines to resize the image to 800×600, but this messed up with the annotations during the conversion, because the original image was not resized. In the end, I just manually resized the image and it worked.

Did you try YOLO on the TX1 using camera input?

I have a compilation error for YOLO + OpenCV 3.0 + TX1, as explained at the link below:

https://groups.google.com/d/msg/darknet/AfZcD-C6yXY/Knr8iKmeAwAJ

Yes, I did try YOLO on Tx1, and it works fine. I have not encountered the problem you met, though. I noticed there is an answer in that Group post, have you tried that?

How long did it take you to train on 300 images for 1 or 2 classes? I am trying to train for a single class from VOC dataset and I am using Grid K520 from AWS EC2 g2x.large instance. It is taking like forever to train for 50 images. I have followed the steps from your blog and made modifications to yolo files. I am just wondering if this is normal or have I missed something.

Should I compute my own pre-train weights if I am trying to classify for just “car” class?

I have only 50 images in train.txt files.

Maybe you have too many epochs? You can just stop training and use the intermediate weights. In my case, I used [yolo_2class_box11_3000.weights] in the example, which took less than an hour to train on a TITAN X.

I did that and used weights for 1000, 2000 but they are not giving good results. Is there some other metric to see like how much is MAP or some other error measure? I can see some numbers printed on the console and they are going down like 0.8 .. then 0.77 .. something like that.

From my knowledge and experience with neural networks, an error measure is generally associated with the training, and sometimes a minimum error metric is used to stop training rather than the number of training iterations.

Yes. The average loss is output after every epoch. If you set subdivision to 2, you can see it clearly. Otherwise the average IOU (intersection over union) for each subdivision are too many and you may miss the average loss that is also printed out.

I do not remember if this was default or something I coded for the printout of average loss. But if you are using the code I provided, it surely will output the average loss.
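For readers unfamiliar with the IOU value printed during training, here is a small sketch of how intersection over union is computed for two axis-aligned boxes. It assumes boxes are given as (x1, y1, x2, y2) corners, which is a choice made for this illustration and not necessarily how darknet stores them internally.

```python
def iou(box_a, box_b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    # Width and height of the overlap; zero when the boxes do not intersect.
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    return inter / union if union > 0 else 0.0


print(iou((0, 0, 2, 2), (1, 1, 3, 3)))
```

A value close to 1 means the predicted box nearly coincides with the ground truth, while 0 means no overlap at all.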

I noticed a mistake while preparing data using voc_label.py: I was making a mistake in the class label while preparing the label files. Then I did some more digging and found some lines in yolo.c that bug me.

I found the same lines in your fork of darknet too. Some lines contain references to VOC files. For example, in line 155 of yolo.c I found this:

list *plist = get_paths("data/voc.2007.test");

Will this not affect the outcome of the program for custom data?

No, this will not affect the outcome of the program for custom data.

The code you mentioned is for the validation of YOLO on the VOC dataset. That function is never used in our scenario. In our case, we are using our own data to train classes that are different from VOC, and we do not have a common dataset to test our performance.

Hi Abhinav,

How long did you wait?

Hi Guanghan! I successfully downloaded your images and labels and built the source code from the darknet website with the makefile set to GPU=1 OPENCV=0, but I encountered a problem when I started training: it always shows a segmentation fault (core dumped). Is it because the number of images is too small? I only used 500+ images for training, but changing the batch and subdivisions parameters to small values in yolo.cfg also doesn’t work.

Help me…Thank you very much!

Do you have enough memory? I remember one of the pals who emailed me had the same problem. In his case, there was not enough memory of his laptop.

It is not a problem of the number of images used for training. Lacking training samples would certainly impair the performance, but should not bring this problem.

Sorry I meant increase the subdivisions in the first statement.

Can I train YOLO on a CPU for only detecting one single class??

Yes, you can. I have a GitHub repository for that. A configuration file and pre-trained weights are available too. Check out this post:

http://guanghan.info/blog/en/my-works/yolo-cpu-running-time-reduction-basic-knowledge-and-strategies/

Hi , can you write the steps for training with one class?

Hey,Ning,How to test yolo using python script,just like demo.py in fast-rcnn?

Somebody may have wrapped it up. But here is one way to go around it:

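import subprocess
cmd = "./darknet yolo test cfg/cpuNet6.cfg cpuNet6.weights %s%s" % (app.config['UPLOAD_FOLDER'], filename)
try:
    stdout_result = subprocess.check_output(cmd, shell=True)
except subprocess.CalledProcessError as err:
    stdout_result = err.output  # darknet exited with a non-zero status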

Is your code saving intermediate results like the original yolo code does?

I am training for one class using your code and I could not find backup weight files after 100 iterations.

I am wondering if I have made another mistake while setting up training files.

Where can I check in source files about this that after how many iterations its writing a weight file?

I found that it saved weight file for 600 iterations but there should be some way to change this. It may be quick on high GPU machines but on the AWS g2x large its fairly slow. It is hard to guess if someone has to wait till 600th iteration before one finds out that its working fine.

It is hard-coded by the author in “yolo.c”:

if(i%1000==0 || i == 600){
    char buff[256];
    sprintf(buff, "%s/%s_%d.weights", backup_directory, base, i);
    save_weights(net, buff);
}

You can change this as you like.

Hi Abhinav. Can you please tell me how you got it working. I also have 600,1000,2000etc weights saved in the backup folder and the training does not stop. Its been 8 hours and still continues?

Also the model did not start training until I gave the learning rate to be 0.000000000001 and batch size=64 and subdivision=8 for two classes.

Please let me know if I have to change something

I copied your code and model file and ran the test "./darknet yolo test cfg/yolo_2class_box11.cfg yolo_2class_box11_3000.weights scripts/sign/images/stopsign/001.JPEG", but I get no box. I want to know why.

How much time it took for anyone of you ? Can you please share including the GPU name.

Thank you for your article. I have succeeded in running YOLO on Linux. I want to run YOLO on Windows; have you ever succeeded in running it on Windows? Waiting for your reply!

Yes, I have succeeded running it in windows. Here is the code:

http://guanghan.info/projects/YOLO/darknet-windows.zip

But you need to use Visual Studio 2015 to open the project.

thank you for your guide!But when I open the “darknet-windows\darknet\darknet.sln”,there is no files in the darknet.sln.

Please tell me how to make a darknet.sln,and send me an e-mail if you have time.

thank you!My e-mail is 15184742190@163.com

I solve the problem,thanks a lot.

It took around 2-3 hours to get the provided weights, with a single TITAN-X GPU.

Do you mean the 2 classes 11×11 version? Did you use any sort of pre-training?

How can I fine tune only one layer, e.g. the last layer only ?

Any info on this Guanghan. Thanks

Hye Guys. is it possible to do text recognition using YOLO?

Seems that there is already a paper doing scene text detection with YOLO:

http://www.robots.ox.ac.uk/~vgg/data/scenetext/gupta16.pdf

Thanks for sharing the windows version. I was able to use your version of yolo-windows for vs2015 but I am having a hard time building opencv binaries for vs 2015. Can you please share a version of yolo-windows with pre-built opencv binaries for vs2015 ?

The training and demo functions are missing in the windows version you have shared. Kindly make a note of that in the description. It can only be run in “test” mode.

Is it possible to build windows version of darknet using visual studio 2012? If not then what is lacking in visual studio 2012?

I have seen another version of darknet which is working for visual studio 2012 and its built using opencv binaries as well. But this version has some issues and I am not able to run darknet in demo version.

I need to have a windows version built with opencv which is capable of running the demo version.

the yolo.weights is for 20 classes. If you want to use it to detect two out of 20 then you should not make any changes to the code and use it as it was. It can detect any objects present among those 20 classes and draw boxes.

The changes in the code is for the case when you have your own training data for any n number of classes and you want to train for that and obtain your own weights

[SOLVED] Hi! I solved it modifying the code and just taking the values that are relevant to my target classes.

Hi,I encountered the similar problem with you,can you tell me how you solved it ? Thank you very much.

I can’t run vid_demo using the source code that you have provided (darknet_video_2class.zip), there is no vid_demo file under the obj directory. Do you have another link to the vid_demo file?

vid_demo is not a file. Take a look at these two files: yolo.c and yolo_kernels.cu

Hi! YOLO is working and detects and draws the corresponding bounding boxes correctly. But also, it displays a big Bounding Box that does not correspond to any of the classes to be detected. Does anyone know why this big Bounding Box is drawn and where it comes from?

Hi! Do you have any alternative to BBox-Label-Tool??

I find this labelling tool easier to work with

https://github.com/tzutalin/labelImg

Thanks for letting us know!

Thanks very much!!

Using labelImg will generate a xml file but I don’t know how to convert this xml to yolo format. Could you please tell me how?

Yes, please provide the solution, how to use xml file generated by labelImg to train yolo. Waiting for answer. Thank you very much.
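One possible way to do this conversion is sketched below. It assumes labelImg saved Pascal VOC style XML (a size element with width and height, and one object element per box with name and bndbox children), and it writes the box center and size as ratios of the image size, which is the layout I understand darknet's VOC script to produce. The function name and the class list are hypothetical, so please compare the output with scripts/voc_label.py before training on it.

```python
import xml.etree.ElementTree as ET


def voc_xml_to_yolo_lines(xml_text, class_names):
    """Convert one Pascal VOC style XML annotation into darknet label lines."""
    root = ET.fromstring(xml_text)
    size = root.find("size")
    img_w = float(size.find("width").text)
    img_h = float(size.find("height").text)
    lines = []
    for obj in root.iter("object"):
        name = obj.find("name").text
        if name not in class_names:
            continue  # skip objects whose class we are not training on
        box = obj.find("bndbox")
        xmin, ymin, xmax, ymax = (float(box.find(tag).text)
                                  for tag in ("xmin", "ymin", "xmax", "ymax"))
        # Normalized box center and size, relative to the image dimensions.
        x_center = (xmin + xmax) / 2.0 / img_w
        y_center = (ymin + ymax) / 2.0 / img_h
        width = (xmax - xmin) / img_w
        height = (ymax - ymin) / img_h
        lines.append("%d %.6f %.6f %.6f %.6f" % (
            class_names.index(name), x_center, y_center, width, height))
    return lines


# Hand-written sample for illustration, not real labelImg output.
SAMPLE_XML = """<annotation>
  <size><width>800</width><height>600</height><depth>3</depth></size>
  <object>
    <name>stopsign</name>
    <bndbox><xmin>100</xmin><ymin>150</ymin><xmax>300</xmax><ymax>450</ymax></bndbox>
  </object>
</annotation>"""

print(voc_xml_to_yolo_lines(SAMPLE_XML, ["stopsign", "yieldsign"]))
```

The position of a name in class_names becomes its class number, so the list must be in the same order as the class names used in the training code.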

https://github.com/Cartucho/yolo-boundingbox-labeler-GUI

Hello? I have a question about the new class that had never been in yolo.c file. If a class is added up to the old class list (let’s say it is the ‘computer mouse’ class), does it detect the new class object as well as the old one?

Yes. If everything is done properly, it should.

Can i do more than 20 classes?

Of course you can. Remember to do the relevant code modifications.

but why when i test it, its only can detect 1 class?

i did changed the cluss number in yolo.c and yolo.kernel also changed the yolo.cfg classes and output.

Hi, nice work and thanks for sharing.

When I use darknet for recognition of cars, I have installed CUDA. I find it can’t adjust for OpenCV. I guess the reason is the version of it. So which version did you use?

Thanks in advance.

Hi, I was using Opencv2.4.9.

I was using Opencv2.4.8. ubuntu 14.0.4. But the error is “unknown type name ‘CvCapture’”.

Did you find any solution??

Ref: https://github.com/pjreddie/darknet/pull/38

Hi!

I have two questions:

What do you use to stabilize the Bounding Boxes and prevent them from flashing?

Do you do any sort of fine-tuning?

Thank you very much for your help.

Thanks for the answer!

Hi!

I try the training for two classes (stopsign and yieldsing) and everything is ok, the training is completed without errors but, when i used the weights the bounding boxes of both signals (stop and yield) have the same label “stopsign” (with good score)

Do you know why?

Futhermore i followed the steps in this page and i detected two issues (i think):

1) When i go to create the labels, if i don’t change “stop sign” by “stopsign” throw me a error.

2) In yolo.c in line 16 appears yeildsing instead of yieldsign.

Are you using the darknet-video-2class.zip code or are you cloning the github code and edited yourself? If it was the former case, there should not be a problem in testing, and if it was the latter case, there must be somewhere you did not do correctly. Have you generated the labels to be displayed upon detection?

Thanks for answer Ning!! I’m using the clone github and i created the labels before the training. It’s curious because when i perform a detection with a photo of yield sign, in the terminal appears (for example) “stopsign: 0.88” and in the bounding box appears “stopsign”. I have tried with photos of yieldsign from the training data and the result is the same, as if the class yieldsign don’t exist and have been merged into a unique class “stopsing”…futhermore i cant use the darknet-video-2class because i have not permissions even with sudo.

Thank you once again!

Hi!

I have the exact same problem as Alfonso. I’m only detecting stopsigns.

Does anybody have a solution for this ?

I figured out my problem. My label files all began with zeros. I had to change that integer to match the class #.

Having the exact same issue with a custom training on 7 classes. It’s accurately boxing the object but is only labeling everything with the first class!

I figured out my problem. My label files all began with zeros. I had to change that integer to match the class #.

are you planning to add the training stage to the windows version that you built?

I am very sorry– recently I am busy doing further research and have no time adding the training stage.

list *plist = get_paths("/home/pjreddie/data/voc/2007_test.txt");

What should this be replaced with in the code “src/yolo.c”? (mentioned in your 3rd point)

thanks you!

The .weights file is not required to generate a new .weights file for new classes, I guess. When replacing all the classes darknet has, the command “darknet.exe yolo train myCFG.cfg” will be fine. If this is wrong, please let me know.

“The .weights file is not required to generate a new .weights file for new classes”. It’s true. Use an existing weight file only when you want to finetune weights on that. For your novel architecture, you can train it from scratch.

Hi, how can i get the mAP, i know “void validate_yolo_recall(char *cfgfile, char *weightfile)”. But how do i calculate with this output the mAP?

my output of the validation process

…

812 964 1094 RPs/Img: 30.79 IOU: 72.07 Recall: 88.12

813 965 1095 RPs/Img: 30.78 IOU: 72.07 Recall: 88.13

814 968 1098 RPs/Img: 30.80 IOU: 72.07 Recall: 88.16

Hi,

I am trying to train the machine with PASCAL VOC 2007 and 2012 dataset. However, the process is running forever. Can someone tell me how much time does it require to train?

GPU: Quadro k2200 GPU

batchsize in yolo.train.cfg is 128

subdivision is set to 128.

If I use any other size combination like 64 and 2 or 128 and 2, I am getting a cuda out of memory error.

Any help will be appreciated.

Thanks,

Hi,

By now, you may have figured it out. but just in case you haven’t, read this (https://groups.google.com/d/msg/darknet/fdkf4tbr-e4/FZq_U2mhBQAJ)

so if you run out of memory, reduce batch size to 64

and then try to increase your subdivisions (starting from 2) by the power of 2.

train with the smallest subdivision number that does not cause any error

Read my comment below. The source codes from Darknet has been altered by original author for some reason. It won’t read it right without making corrections in parser.c and also won’t write any good weights file without making corrections both in utils.c and parser.c. That’s why it shows NaN.

I have a question: why did you use FILE *fp = fopen(filename, "w") in line 612 and FILE *fp = fopen(filename, "r") in line 723 in parser.c, even though your pre-trained weights (yolo_2class_box11_3000.weights) can only be accessed if "rb" is used for line 723? And did you save the weights file with the "w" option or the "wb" option? Thanks.

what changes are necessary in the file “utils.c”?

I’m trying to train YOLO on my own data on a CPU,

but I got a message that the training was killed. Any ideas about it?

https://groups.google.com/forum/#!topic/darknet/M63bCX4Gygg

I have the same problem. have you solved this?

Hi, Ning. I have some questions about the YOLO paper.

Why does it divide the image into a 7 * 7 grid, and why does each grid cell predict B bounding boxes?

Why can’t we just use a 1 * 1 grid to predict B bounding boxes?

With a 1 * 1 grid, you can’t detect smaller objects.

Is it possible to train a detector with images that have more than 3 channels? Like RGBA images

I don’t see why not, but in order to do so, you have to change the network a little bit,

probably the first-layer filters, since they are designed to convolve with 3-channel images.

After I started training, the following error appeared after a week. Note that I am running darknet on CPU with the VOC 2007 and 2012 data. Can anyone help?

/home/tanvir/darknet/VOCdevkit/VOC2007/JPEGImages/004297.jpg

Cannot load image “/home/tanvir/darknet/VOCdevkit/VOC2007/JPEGImages/004297.jpg”

STB Reason: can’t fopen

I have the same problem. I’m training YOLO for 196 classes.

Check whether the image exists at the given path.

If everything is OK and you can open the image fine, then it may be an encoding problem.

I once copied the text file from Windows and encountered a similar problem.

I solved mine by rewriting all the text files in Ubuntu with UTF-8 encoding.

The UTF-8 fix didn’t help, but your post gave me the idea to check for similar things. I was editing the text file in Notepad++ on Windows, so it used Windows CR LF line endings. I changed them to Unix LF and it worked! Thanks!!
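For anyone hitting the same "can’t fopen" error, the two checks from this exchange (line endings and missing files) can be sketched in Python. The function names are made up for illustration, and the list file is assumed to hold one image path per line.

```python
from pathlib import Path


def normalize_line_endings(path):
    """Rewrite a text file in place with Unix LF line endings.

    Returns True when the file had to be changed.
    """
    raw = Path(path).read_bytes()
    fixed = raw.replace(b"\r\n", b"\n").replace(b"\r", b"\n")
    if fixed != raw:
        Path(path).write_bytes(fixed)
    return fixed != raw


def missing_images(list_path):
    """Return the paths in a list file that do not exist on disk."""
    lines = Path(list_path).read_text(encoding="utf-8").splitlines()
    return [line for line in lines
            if line.strip() and not Path(line.strip()).is_file()]


# Example usage (the path is a placeholder for your own training list):
#   normalize_line_endings("train.txt")
#   print(missing_images("train.txt"))
```

Running the line-ending fix first matters, because a trailing carriage return becomes part of the file name and makes every path look missing.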

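Hi, your article is really great! My environment is Ubuntu 16.04 + gcc 4.9 and 5.4 + CUDA 7.5 + GTX 1080, but I ran into a problem: building with OPENCV=1 works, but with GPU=1 I get a compilation error: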

……

/usr/local/cuda/include/surface_functions.h(484): error: expected a “;”

/usr/local/cuda/include/surface_functions.h(485): error: expected a “;”

Error limit reached.

100 errors detected in the compilation of “/tmp/tmpxft_000001b4_00000000-7_softmax_layer_kernels.cpp1.ii”.

Compilation terminated.

Makefile:55: recipe for target ‘obj/softmax_layer_kernels.o’ failed

make: *** [obj/softmax_layer_kernels.o] Error 4

How can I resolve this error?

Haoran Liu, I had similar errors when trying to build the original DarkNet with OpenCV3. Check if you can build with GPU, CUDNN and OPENCV set to 0 in Makefile. If that works, you may try the patch from Prabindh Sundareson and make sure you set the path variables etc correctly, I’ve posted my steps here: https://covijn.com/2016/11/fixing-darknet-opencv3-make-error-convolutional_kernels/

Why would you provide all of the data to retrain the model and not provide us the video file to test it?

Also, why would anyone store their videos here? “/video/test.mp4” should be “video/test.mp4”.

As for your first question (or grumble), I believe Google can provide you with a test video very easily.

(It might have taken less time to google a test video than to write your complaint here.)

Second, I believe the author made a typo.

Is there any way to supply variable size input images to YOLO for training and testing? Obviously resizing to a fixed size is one option. Is there any other way to get around it?

Thanks in advance for helpful comments.

As far as I know, you can’t use images of different sizes to train a conv net. Maybe there is a way, but I see no point in doing it. Why do you want to do so? I’d like to hear your idea.

For testing, you can provide images of various sizes.

Is it possible to train on different size images?

The images provided for stopsign and yieldsign are of variable size.

If it’s not possible to train on images of different sizes, can I convert the annotation data to match the resized images?

I don’t want to label the images again.

When you start training, it reads all the images and automatically resizes them to 448×448 (or whatever size you set in the cfg).

So the example images (stopsign/yieldsign) will be resized before they are fed to the network.

I am not sure what annotation data you’re talking about, but once you convert the labeling data using convert.py, it contains only ratio information, so it doesn’t really matter if the images are resized at training time.
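To illustrate why ratio labels survive resizing, here is a minimal sketch. It assumes the converted label stores the box center and size as fractions of the image width and height; `to_ratio_box` is a name made up for this example, not a function taken from convert.py.

```python
def to_ratio_box(image_w, image_h, xmin, xmax, ymin, ymax):
    """Convert pixel corners to (x_center, y_center, width, height) fractions."""
    x = (xmin + xmax) / 2.0 / image_w
    y = (ymin + ymax) / 2.0 / image_h
    w = (xmax - xmin) / image_w
    h = (ymax - ymin) / image_h
    return (x, y, w, h)


# A box in a 400x200 image ...
original = to_ratio_box(400, 200, 100, 200, 50, 100)

# ... and the same box after the image is stretched to 448x448.
sx, sy = 448 / 400, 448 / 200
resized = to_ratio_box(448, 448, 100 * sx, 200 * sx, 50 * sy, 100 * sy)

print(original)
print(resized)
```

Both tuples agree up to floating-point rounding, so the labels do not need to be regenerated when the network input size changes.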

Ning, many thanks for providing your solution here. I’m running your pre-compiled version on Ubuntu 16.04 trying to feed it my own .mp4 video file and it returns the following error:

./darknet yolo demo_vid cfg/yolo_2class_box11.cfg [my_weights] [my_video.mp4]

/skip/

32: Detection Layer

forced: Using default ‘0’

Loading weights from myyolo.weights…Done!

Video File name is: big_buck_bunny_720p_2mb.mp4

./darknet: symbol lookup error: ./darknet: undefined symbol: _ZN2cv12VideoCaptureC1ERKSs

Do you have any recommendations on how to resolve this? The only modification I had to make was to copy the libraries libcudart, libcublas, and libcurand from their 7.5 versions to 7.0, as I have a later version of CUDA installed and your pre-compiled binary requires 7.0.

please disregard the above question as I was able to compile from sources without problems

30: Connected Layer: 12544 inputs, 539 outputs

31: Assertion failed: (side*side*((1 + l.coords)*l.n + l.classes) == inputs), function make_detection_layer, file ./src/detection_layer.c, line 27.

Abort trap: 6

I have only one class to train, so my output according to your formula is 539. Why am I getting the assertion error?

You have to change the value of ‘side’ accordingly.

In your case, it seems you wanted 7*7*(5*2+1)=539.

Make sure you have set the ‘side’ value to 7.

31: Assertion failed: (side*side*((1 + l.coords)*l.n + l.classes) == inputs), function make_detection_layer, file ./src/detection_layer.c, line 27.

I keep getting this error. I have changed the output to 1452 and the number of classes to 2.

I’m using your images and stopsign data.

Make sure that ‘side’ is set to 11.
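The assertion in both reports compares the output count of the last connected layer with side*side*((1 + coords)*n + classes). A quick way to check a cfg before training is to evaluate that expression directly. This sketch assumes coords=4 and n=2 boxes per cell, which matches the 5*2 term in the replies above; `detection_outputs` is a helper name invented for this example.

```python
def detection_outputs(side, num_boxes, classes, coords=4):
    """Output count the detection layer expects from the previous layer."""
    return side * side * ((1 + coords) * num_boxes + classes)


# One class on a 7x7 grid: 7*7*(5*2+1)
print(detection_outputs(side=7, num_boxes=2, classes=1))

# Two classes on an 11x11 grid: 11*11*(5*2+2)
print(detection_outputs(side=11, num_boxes=2, classes=2))
```

If the number printed for your settings differs from the output value of the last connected layer in the cfg, the assertion will fail.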

Couldn’t open file: /data/voc/train.txt

When I run the training code, it references this file.

I couldn’t find where in the code to modify this path.

You can modify the trainlist.txt file path in yolo.c,

or you can just put your trainlist file in the specified directory,

/data/voc/ in your case.

I did this (I set in yolo.cfg: char *train_images = "/home/osboxes/Desktop/yolo-test/train.txt"; char *backup_directory = "/home/osboxes/Desktop/yolo-test/backup/";). But it still asks for /data/voc/train.txt, because cfg/voc.data contains “train = /home/pjreddie/data/voc/train.txt”.

But the example on this site doesn’t mention anything about cfg/voc.data.

What am I doing wrong?

hello. I followed the training procedure for roadsign detection, using training images and label file that you have provided. However, after the first iteration, I am getting “Avg IOU : nan” for all images. I checked all possible issues but still not able to solve this problem.

Were you able to fix this problem?

I do not know exactly what causes this problem, but I trained mine with a smaller model and a smaller learning step. Try training with the small or tiny model, and keep your learning rate low. When you change the learning rate in the cfg file, change steps and scales as well. If the first scale is too big, try lowering it.

I am having the same issue: for all images, box(x,y,w,h) = (nan, nan, nan, nan) and the detection IOU is nan as well. Any idea why? I followed all the steps and used the same data and labels as given.

Hi there, I followed the provided example to reproduce the training of a net for stop & yield sign detection. The training started from scratch. The cfg file used is yolo_2class_box11.cfg. However, the loss remained around 1.75 after 13000 iterations, like this:

13875: 1.657367, 1.751951 avg, 0.005000 rate, 7.913005 seconds, 888000 images

This model cannot correctly detect the signs at all… It looks like the loss cannot decrease efficiently. Using the provided trained model, the loss is around 0.4, and the model produces correct detection.

Can anyone help? Anything I missed here? Many thanks in advance.

My loss got stuck around 1.43, much like yours. I took a weights file saved before the loss had reached 1.43 and continued training with a smaller model: I started with yolo_2class_box11.cfg, then changed the model to tiny and continued training, and I got pretty good results.

Playing with the learning rate would also help. I tried many different learning rates.

I think being stuck at a certain loss has something to do with dead neurons. I think we need more study and experience.

Sorry, could you explain the relation between subdivision and batch size? I am reading the YOLO code to make a comprehensive evaluation for my thesis, and I don’t understand how the two parameters relate. Thanks!

Batch size: how many images you load into memory.

Subdivision: how many parts the images in a batch are divided into for upload.

So you are actually loading batch/subdivision images at once.

If you increase the batch size, you analyze more images for each update, so update accuracy goes up at the cost of more training time.

I think subdivision exists for memory problems. If you encounter a memory issue, try increasing subdivision by powers of 2, and use the value that does not cause a memory problem.
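The relation described in this reply comes down to one division. A minimal sketch, using integer division as a simplifying assumption and a helper name invented for this example:

```python
def images_per_pass(batch, subdivisions):
    """Images held in memory at once: the batch split across subdivisions."""
    return batch // subdivisions


for batch, subdivisions in [(64, 1), (64, 2), (64, 4), (128, 128)]:
    print(batch, subdivisions, images_per_pass(batch, subdivisions))
```

The last pair is the setting reported earlier in the thread (batch 128, subdivision 128): only one image is processed at a time, which keeps memory use low but makes each update slow.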

What is the “Count” in the training data?

Detection Avg IOU: 0.000000, Pos Cat: 413.734467, All Cat: 310.563416, Pos Obj: -27.928844, Any Obj: -22.873415, count: 32

It takes over 15-20 minutes for each image to train. I feel like it is too much time. How much time should it take for training on the stopsign data?

Hi, is there any chance I can reproduce your results using yolo-small.cfg ? I have an old gtx670 with only 2gb of VRAM, thus I cannot use your .cfg file.

You can reduce the batch size or increase subdivision to make it work with your GPU.

Go ahead and edit those parts of your cfg file, then give it a try.

Of course, a smaller model will work as well.

Thanks for your reply. It seems I cannot reduce the size enough by tweaking the parameters, but it’s very good to know that I can use the small model too. Given your reply to Yasha, will I also get reasonable results with the tiny model (and save a lot of time)?

I don’t know how much accuracy you need from YOLO, but I was able to get pretty satisfying results with the tiny model.

From what I remember, the tiny model required around 2.xx GB of memory, so you may need to increase the subdivision parameter by powers of two, starting from 2, and choose the one that doesn’t cause a memory problem.

good luck.

Actually, that worked out quite fine: 2000 repetitions with a batchsize of 64 (subdivision 4)

already yielded good results. I trained it up till 10500 reps, but the gain was rather minor I would say. I will now work with stop + yieldsign, and then start with my own stuff. Thx for your help!

my pleasure

Enjoy learning machine learning

Hi.

I’m not 100% sure, but you could check the following:

1. Make sure you have compiled with GPU enabled (GPU=1 in your Makefile).

2. Set the proper numbers for gpu-architecture and gpu-code (they are also in the Makefile).

If you are using a GTX 1080 (Pascal), both should be 61.

Let me know how it goes.

Thank you for the solution.. However, in my case, it doesn’t work… Still I get the same Segmentation fault error…

Segmentation fault also tends to happen when you don’t have enough memory. How much RAM do you have?

By now, you may have figured it out. But in case you’re still struggling, read the following:

I happened to encounter the same problem yesterday.

Mine was due to a mistake in the cfg file:

I had accidentally added a comma (,), or the number of steps and scales didn’t match.

Please check your cfg file.
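The two mistakes mentioned here (a stray comma, or a different number of steps and scales) are easy to check mechanically. A rough sketch, assuming the cfg uses plain key=value lines with comma-separated lists; `check_steps_and_scales` is a helper written for this example and is not part of darknet.

```python
def check_steps_and_scales(cfg_text):
    """Return a list of problems found in the steps= and scales= lines."""
    values = {}
    problems = []
    for raw in cfg_text.splitlines():
        line = raw.strip()
        # Skip blank lines, comments, and section headers such as [net].
        if not line or line[0] in "#;[" or "=" not in line:
            continue
        key, value = (part.strip() for part in line.split("=", 1))
        if key in ("steps", "scales"):
            items = [item.strip() for item in value.split(",")]
            if any(item == "" for item in items):
                problems.append(f"{key}: empty entry (stray comma?)")
            values[key] = [item for item in items if item]
    if "steps" in values and "scales" in values:
        if len(values["steps"]) != len(values["scales"]):
            problems.append(
                f"steps has {len(values['steps'])} entries, "
                f"scales has {len(values['scales'])}"
            )
    return problems


sample = "[net]\nsteps=100,200,\nscales=.1,.1,.1\n"
for problem in check_steps_and_scales(sample):
    print(problem)
```

An empty result means these two lines look consistent; it does not rule out other causes of a segmentation fault.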

Hello! Thank you for explaining and sharing how to train YOLO. When I try to train or validate, I get a ‘segmentation fault (core dumped)’ error. I used the same cfg, src, and weights files from this website to try YOLO with stop and yield sign detection. I am using Ubuntu 14.04, a GTX 1080, and CUDA 8.0, with 16 GB of RAM.

First of all, thanks a lot for your blog, it is extremely helpful.

The original YOLO tiny model has 20 classes. Is it possible to use the weights of the original model to train with a different number of classes? I guess the difference should be only in the last layer. If not, do you have an idea of how to transfer the weights of the first N-1 layers?

Thanks