LightTrack: A Generic Framework for Online Top-Down Human Pose Tracking |

| Guanghan Ning, Heng Huang |

|

| Human Pose Tracking, Visual Object Tracking, Siamese Graph Convolutional Networks, Re-ID |

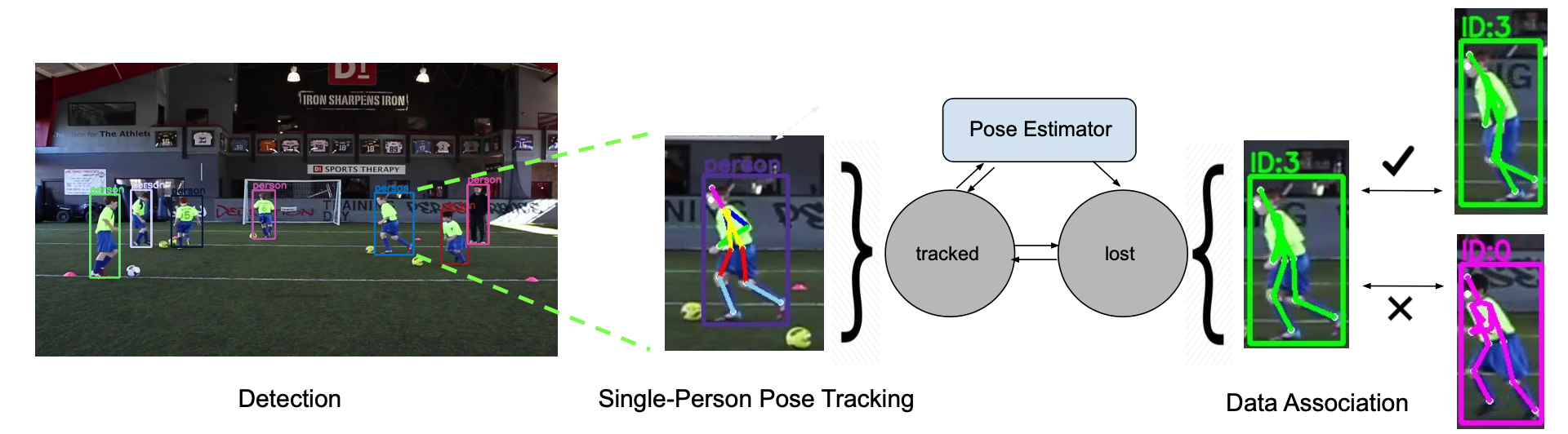

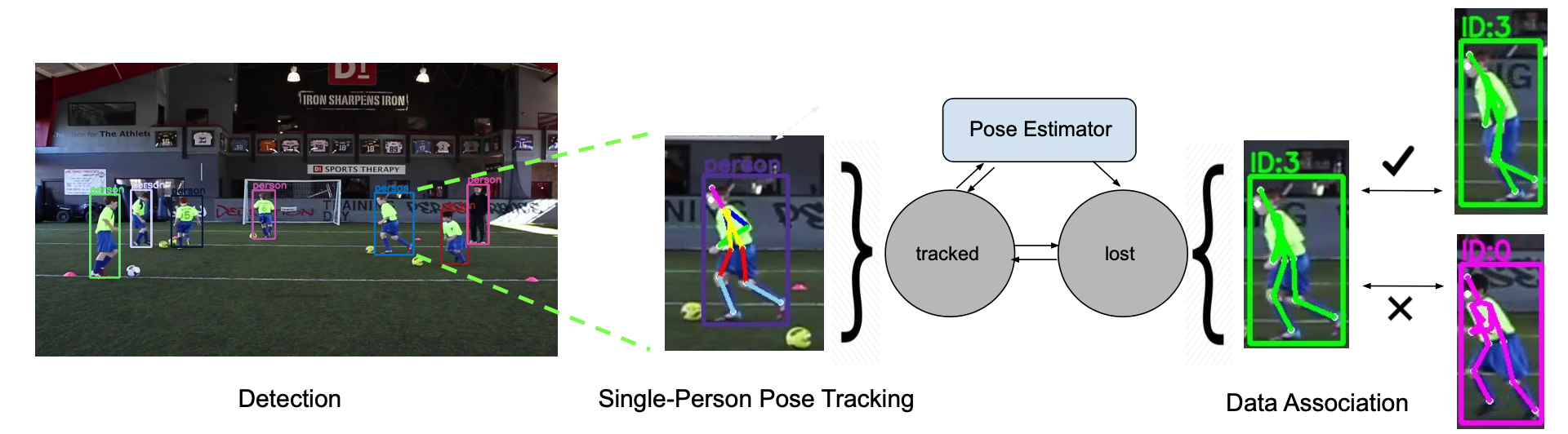

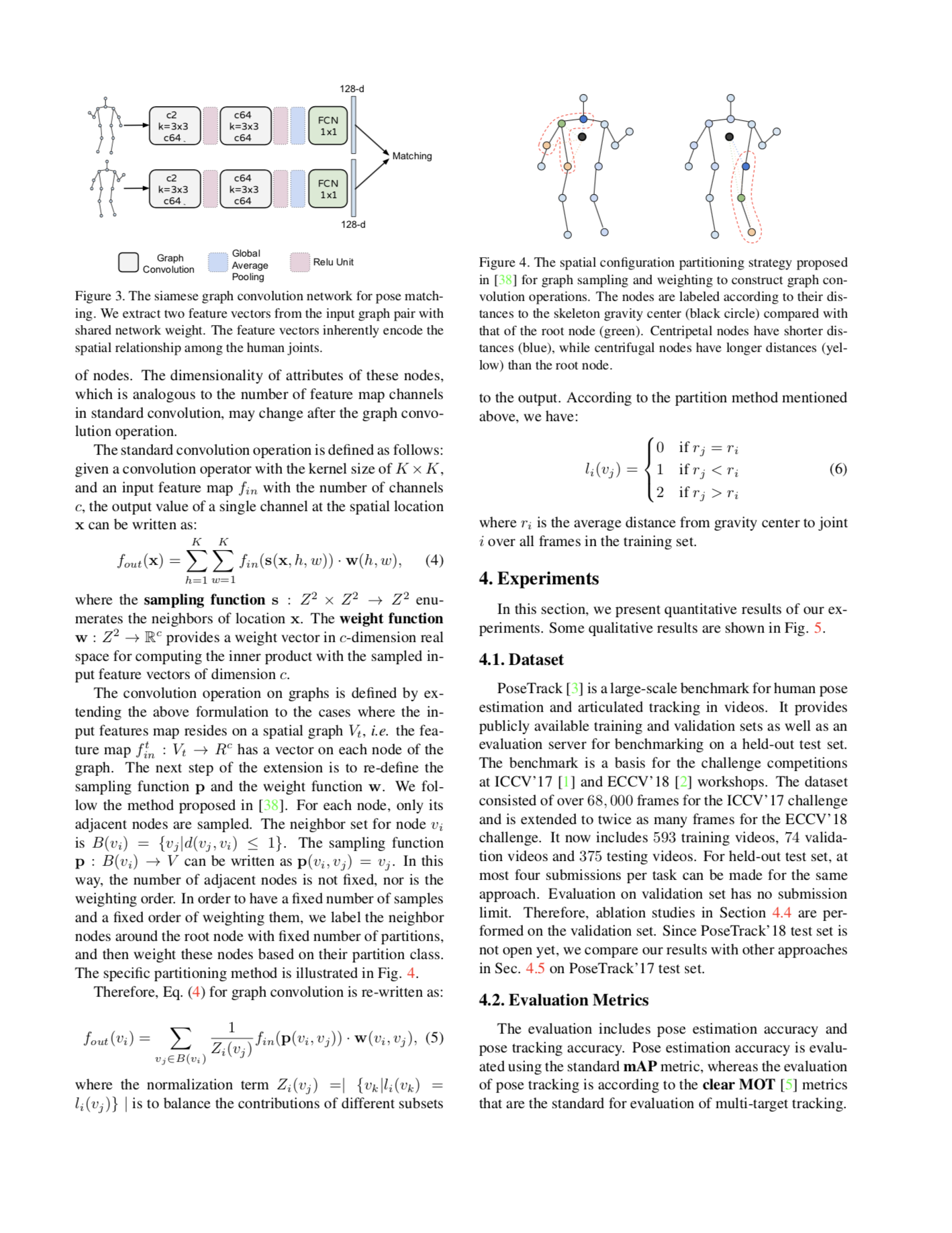

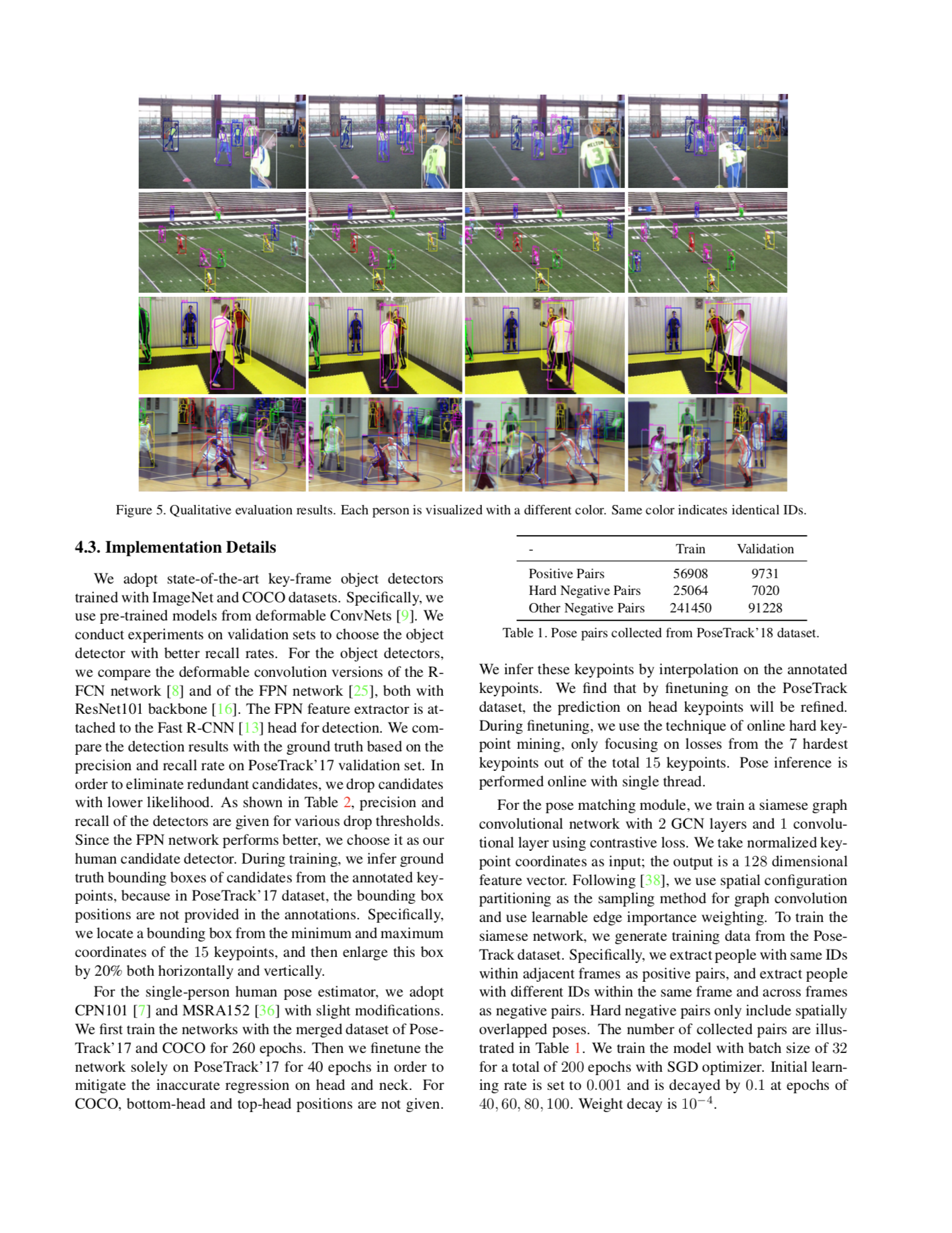

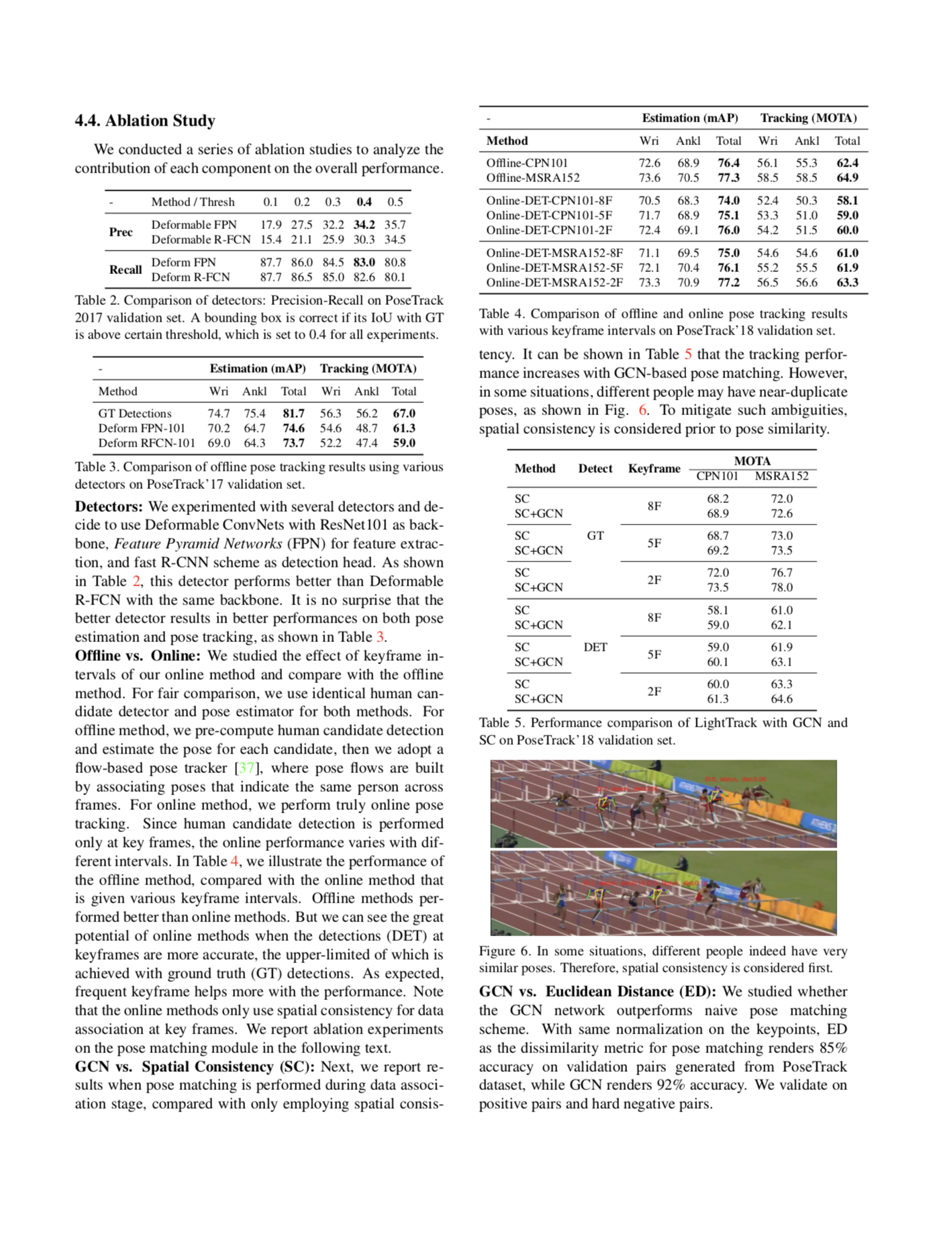

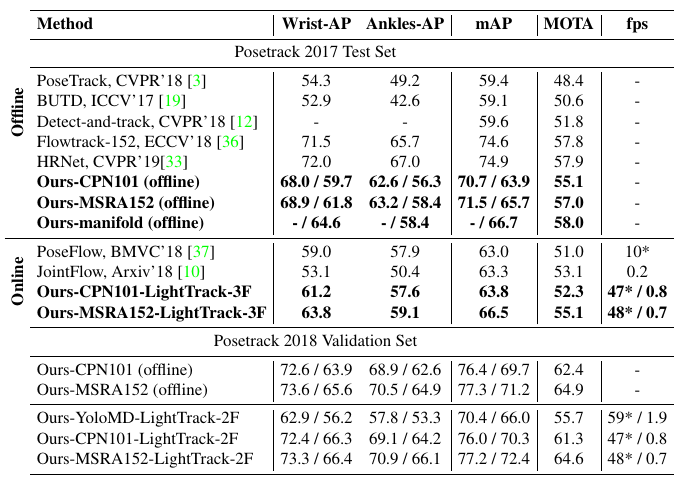

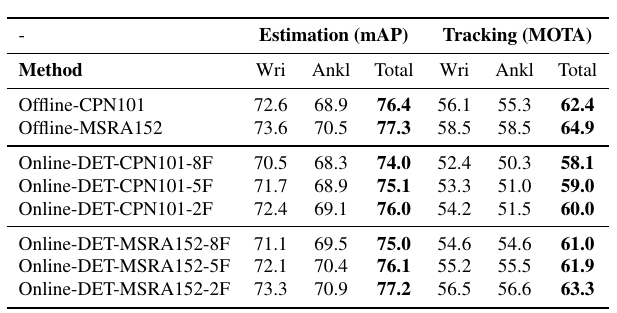

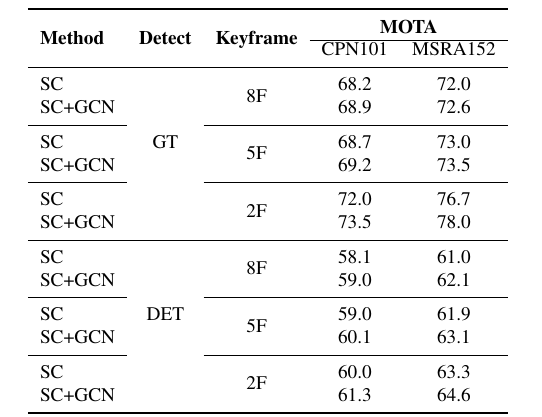

| In this paper, we propose a novel, effective, lightweight framework called LightTrack for online human pose tracking. The framework is designed to be generic for top-down pose tracking and is faster than existing online and offline methods. Single-person Pose Tracking (SPT) and Visual Object Tracking (VOT) are incorporated into one unified functioning entity, easily implemented by a replaceable single-person pose estimation module. Our framework unifies single-person pose tracking with multi-person identity association and sheds first light on bridging keypoint tracking with object tracking. We also propose a Siamese Graph Convolution Network (SGCN) for human pose matching, which serves as the Re-ID module in our pose tracking system. In contrast to other Re-ID modules, we use a graphical representation of human joints for matching. The skeleton-based representation effectively captures human pose similarity and is computationally inexpensive. It is also robust to sudden camera shifts that cause human drifting. To the best of our knowledge, this is the first paper to propose an online human pose tracking framework in a top-down fashion. The proposed framework is general enough to fit other pose estimators and candidate matching mechanisms. Our online method outperforms other online methods while maintaining a much higher frame rate, and is very competitive with our offline state-of-the-art method. |
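To make the idea of skeleton-based pose matching more concrete, here is a minimal sketch of a Siamese comparison over a joint graph. It is not the released SGCN: the 5-joint skeleton, the single graph-convolution layer, the fixed weight matrix `WEIGHT`, the mean pooling, and the distance threshold are all assumptions chosen for illustration, and nothing here is trained.

```python
import math

# Hypothetical toy skeleton (assumption, not the paper's keypoint layout):
# 0 head, 1 neck, 2 left hand, 3 right hand, 4 hip.
NUM_JOINTS = 5
EDGES = [(0, 1), (1, 2), (1, 3), (1, 4)]

def normalized_adjacency(num_joints, edges):
    """Return D^-1/2 (A + I) D^-1/2 as a nested list."""
    a = [[1.0 if i == j else 0.0 for j in range(num_joints)]
         for i in range(num_joints)]
    for i, j in edges:
        a[i][j] = a[j][i] = 1.0
    deg = [sum(row) for row in a]
    return [[a[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(num_joints)]
            for i in range(num_joints)]

def matmul(x, y):
    """Plain nested-list matrix product."""
    return [[sum(x[i][k] * y[k][j] for k in range(len(y)))
             for j in range(len(y[0]))] for i in range(len(x))]

def embed(pose, adj, weight):
    """One graph-convolution layer, ReLU(A_hat X W), then mean pooling over joints."""
    h = matmul(matmul(adj, pose), weight)
    h = [[max(0.0, v) for v in row] for row in h]
    return [sum(row[c] for row in h) / len(h) for c in range(len(h[0]))]

def pose_distance(pose_a, pose_b, adj, weight):
    """Siamese comparison: both poses pass through the same embed() and weights."""
    ea, eb = embed(pose_a, adj, weight), embed(pose_b, adj, weight)
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(ea, eb)))

def is_same_pose(pose_a, pose_b, adj, weight, threshold=0.1):
    """Declare a match when the embedding distance is below an assumed threshold."""
    return pose_distance(pose_a, pose_b, adj, weight) < threshold

ADJ = normalized_adjacency(NUM_JOINTS, EDGES)
# Illustrative, untrained weights mapping (x, y) to a 3-channel feature.
WEIGHT = [[1.0, 0.0, 0.5],
          [0.0, 1.0, 0.5]]

# Joint coordinates normalized to the person's bounding box (x, y in [0, 1]).
STANDING = [[0.5, 0.1], [0.5, 0.3], [0.2, 0.5], [0.8, 0.5], [0.5, 0.6]]
ARMS_UP = [[0.5, 0.1], [0.5, 0.3], [0.2, 0.1], [0.8, 0.1], [0.5, 0.6]]

if __name__ == "__main__":
    print(pose_distance(STANDING, STANDING, ADJ, WEIGHT))
    print(pose_distance(STANDING, ARMS_UP, ADJ, WEIGHT))
```

Because the input is a handful of joint coordinates rather than an image crop, the comparison is cheap, which matches the motivation given above for using a skeleton representation in the Re-ID step.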

| The code and models are publicly available on GitHub. |

paper

slides

|

| We have released the training and testing code, together with the pre-trained models, on GitHub. |

|

| [1] | Chen, Yilun, et al. "Cascaded pyramid network for multi-person pose estimation." CVPR (2018). |

| [2] | Xiao, Bin, Haiping Wu, and Yichen Wei. "Simple baselines for human pose estimation and tracking." ECCV (2018). |

| [3] | Ning, Guanghan, et al. "A top-down approach to articulated human pose estimation and tracking." ECCVW (2018). |

| [4] | Sun, Ke, et al. "Deep High-Resolution Representation Learning for Human Pose Estimation." CVPR (2019). |

| [5] | Xiu, Yuliang, et al. "Pose flow: efficient online pose tracking." BMVC (2018). |

| [6] | Dai, Jifeng, et al. "Deformable convolutional networks." ICCV (2017). |